I recently discovered a robot called SEER, which can copy your facial expressions in real time. The name stands for “Simulative Emotional Expression Robot,” and many robotics companies are trying to build something similar.

Expression mimicry matters because it blurs the line between humans and machines, especially for robots meant to be companions for people. However, SEER’s technology can be expensive.

I want to create a basic, low-cost, and open-source version of this technology so that new engineers in robotics can use it and make their own systems. This is a big project, so I’ll break it down into two parts.

First, we’ll learn how to track the movements of a person’s face, and then we’ll figure out how to make a robot copy those expressions.

For the first part, here’s what we’ll need:

Bill of Materials

| Component | Quantity | Description | Price |
| --- | --- | --- | --- |
| Raspberry Pi / Laptop | 1 | 4 GB or greater RAM | 4000 |
| Camera | 1 | Raspberry Pi CSI camera | 3000 |
| Servo motors | 4 | Micro servo | 800 |

Code for Facial Expression Recognition

First, you’ll need to set up your Python environment. Make sure you have Python 3 installed, along with an integrated development environment (IDE) such as Thonny, Geany, or IDLE3.

Next, you’ll need to install the necessary modules and libraries for your coding project:

NumPy: This stores facial landmark coordinates as numerical arrays and calculates distances between landmark points. To install NumPy, open your terminal and run:

sudo pip3 install numpy

Dlib: This assists in extracting facial landmarks from the face. To install Dlib, use:

sudo pip3 install dlib

OpenCV: This is useful for capturing real-time video and processing faces. To install OpenCV, run:

sudo pip3 install opencv-python

Pyserial: You’ll need this library to send commands and values to a servo controller device so the robot can mimic the recognized facial expressions. To install Pyserial, run:

sudo pip3 install pyserial

Once you’ve installed all these components, you’re ready to start coding.
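Before moving on, it can help to confirm that the libraries are importable. The following is a minimal sketch, not part of the original project code; the helper name `missing_modules` is hypothetical, and the import names `cv2` and `serial` are the ones used by the `opencv-python` and `pyserial` packages.

```python
from importlib.util import find_spec

# Import names differ from pip package names in two cases:
# opencv-python is imported as cv2, and pyserial as serial.
REQUIRED_MODULES = ["numpy", "dlib", "cv2", "serial"]


def missing_modules(names):
    """Return the import names from `names` that cannot be found."""
    return [name for name in names if find_spec(name) is None]


missing = missing_modules(REQUIRED_MODULES)
if missing:
    print("Missing modules:", ", ".join(missing))
else:
    print("All required modules are installed.")
```

If anything is reported as missing, rerun the matching install command before continuing.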

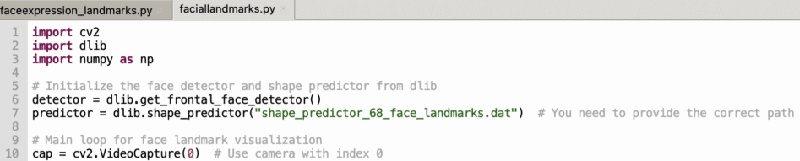

Begin by opening Python IDLE3 and importing the necessary libraries and modules, including Numpy, Dlib, and OpenCV.

Also, set the path for the face landmark predictor model, which should be in “.dat” format and can be downloaded from GitLab.
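As a rough sketch of the model-loading step, the code below checks that the “.dat” file exists and then builds Dlib’s face detector and landmark predictor. The filename `shape_predictor_68_face_landmarks.dat` is an assumption (it is the commonly used 68-point model); substitute the path of the file you actually downloaded. The function name `load_models` is hypothetical.

```python
import os

# Assumed filename: Dlib's commonly used 68-point landmark model.
# Replace it with the path to the ".dat" file you downloaded.
PREDICTOR_PATH = "shape_predictor_68_face_landmarks.dat"


def load_models(predictor_path=PREDICTOR_PATH):
    """Return (face_detector, landmark_predictor) built with Dlib."""
    if not os.path.isfile(predictor_path):
        raise FileNotFoundError(f"Landmark model not found: {predictor_path}")
    # Imported here so that a missing model file is reported first.
    import dlib

    detector = dlib.get_frontal_face_detector()
    predictor = dlib.shape_predictor(predictor_path)
    return detector, predictor
```

Calling `detector, predictor = load_models()` once at startup keeps the slow model load out of the video loop.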

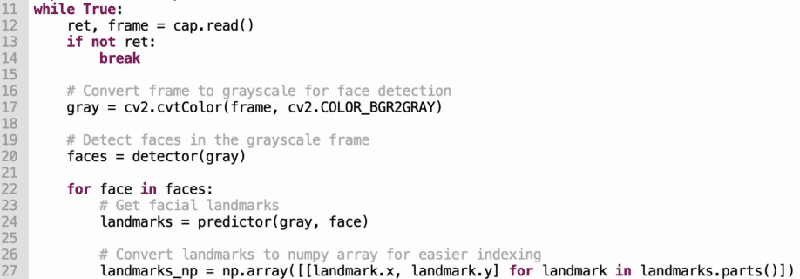

Next, create a while loop to capture real-time video frames and process them.

Here’s a basic outline of the steps:

- Convert the image frame from BGR to grayscale for faster processing.

- Resize the frame to a smaller size.

- Detect the face, then use Dlib’s facial landmark predictor to locate the landmarks in the frame.

- Convert these landmarks into a numerical array using NumPy.
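The steps above can be sketched roughly as follows. This is an illustrative outline rather than the exact code from the screenshots: the function names `shape_to_array` and `track_landmarks`, the camera index `0`, and the scale factor `0.5` are all assumptions.

```python
import numpy as np


def shape_to_array(shape):
    """Convert a Dlib landmark result into an (N, 2) integer NumPy array."""
    return np.array(
        [(shape.part(i).x, shape.part(i).y) for i in range(shape.num_parts)],
        dtype=int,
    )


def track_landmarks(predictor_path, camera_index=0, scale=0.5):
    """Show live video with landmarks drawn on it; press 'q' to quit."""
    # Imported here so shape_to_array can be used without a camera setup.
    import cv2
    import dlib

    detector = dlib.get_frontal_face_detector()
    predictor = dlib.shape_predictor(predictor_path)
    cap = cv2.VideoCapture(camera_index)
    try:
        while True:
            ok, frame = cap.read()
            if not ok:
                break  # camera unavailable or stream ended
            # Step 1: convert BGR to grayscale.
            gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
            # Step 2: shrink the frame to speed up detection.
            small = cv2.resize(gray, None, fx=scale, fy=scale)
            # Step 3: find faces, then predict landmarks for each one.
            for face in detector(small, 0):
                shape = predictor(small, face)
                # Step 4: convert the landmarks to a NumPy array.
                landmarks = shape_to_array(shape)
                for x, y in landmarks:
                    cv2.circle(small, (int(x), int(y)), 2, 255, -1)
            cv2.imshow("Facial landmarks", small)
            if cv2.waitKey(1) & 0xFF == ord("q"):
                break
    finally:
        cap.release()
        cv2.destroyAllWindows()
```

Calling `track_landmarks("path/to/model.dat")` with the path to your predictor file starts the loop. The landmark coordinates refer to the resized frame, so divide them by `scale` if you need positions in the original image.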

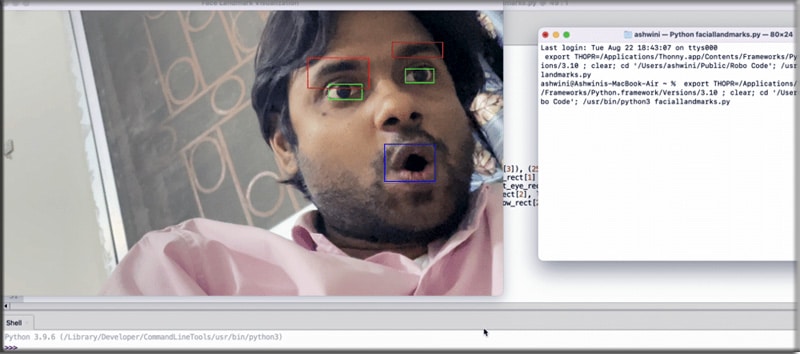

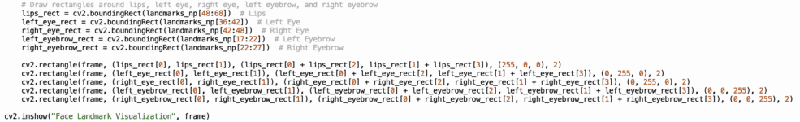

Once you’ve captured the landmarks, you can extract specific parts of the face from the array, like the left eye, right eye, left eyebrow, right eyebrow, lips, etc.

You can also include additional landmarks like cheeks, chin, nose, etc., for more precise facial expression simulation in robots.
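One way to pull these parts out of the array is by index range. The sketch below assumes the widely used 68-point landmark layout, which the article does not name explicitly; if your “.dat” model uses a different layout, the ranges will differ. “Left” and “right” refer to the subject’s own left and right. That layout has no dedicated cheek points, so the jaw outline (whose point 8 is the tip of the chin) is the closest available stand-in. The names `FACE_REGIONS` and `split_regions` are hypothetical.

```python
import numpy as np

# Index ranges for the assumed 68-point layout.
FACE_REGIONS = {
    "jaw": slice(0, 17),
    "right_eyebrow": slice(17, 22),
    "left_eyebrow": slice(22, 27),
    "nose": slice(27, 36),
    "right_eye": slice(36, 42),
    "left_eye": slice(42, 48),
    "lips": slice(48, 68),
}


def split_regions(landmarks):
    """Split a (68, 2) landmark array into named facial regions."""
    landmarks = np.asarray(landmarks)
    if landmarks.shape != (68, 2):
        raise ValueError(f"expected shape (68, 2), got {landmarks.shape}")
    return {name: landmarks[idx] for name, idx in FACE_REGIONS.items()}


# Placeholder data standing in for real landmarks from the predictor.
demo_landmarks = np.arange(136).reshape(68, 2)
regions = split_regions(demo_landmarks)
for name, points in regions.items():
    print(name, len(points))
```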

Finally, you can track changes and positions of these landmarks in real time as they move when you raise an eyebrow, blink, or move your lips. This tracking data can be used to simulate facial expressions in your robot project.
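To turn landmark positions into numbers a robot could follow, one simple option is to measure a few distances and divide them by the face width, so the values do not change much as you move closer to or farther from the camera. This is only an illustrative choice of features, not necessarily the method used in this project, and it again assumes the 68-point layout. The function name `expression_features` and the chosen landmark indices are assumptions.

```python
import numpy as np


def expression_features(landmarks):
    """Return normalized mouth-opening and eyebrow-raise measurements."""
    pts = np.asarray(landmarks, dtype=float)
    # Face width: distance between the two ends of the jaw outline.
    face_width = np.linalg.norm(pts[16] - pts[0])
    if face_width == 0:
        raise ValueError("face width is zero; landmarks look invalid")

    # Mouth opening: gap between the inner upper and inner lower lip centers.
    mouth_open = np.linalg.norm(pts[66] - pts[62]) / face_width

    # Eyebrow raise: distance from the middle of each brow to the eye center.
    right_eye_center = pts[36:42].mean(axis=0)
    left_eye_center = pts[42:48].mean(axis=0)
    right_brow = np.linalg.norm(pts[19] - right_eye_center) / face_width
    left_brow = np.linalg.norm(pts[24] - left_eye_center) / face_width

    return {
        "mouth_open": mouth_open,
        "right_brow_raise": right_brow,
        "left_brow_raise": left_brow,
    }
```

Comparing these values from frame to frame shows when the mouth opens or an eyebrow lifts, which is the kind of signal the servo stage can later map to motor angles.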

Testing

Run the code, and a video window will open showing your facial landmarks tracked in real time. As you move your face and make different expressions, the display updates to follow these changes.

Note: Translating the landmarks into servo movements and calibrating the servomotors will be covered in the next part.
