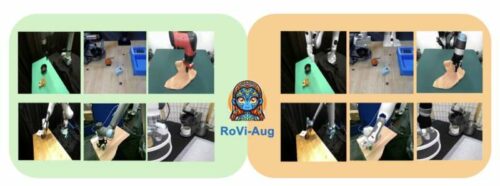

Researchers at UC Berkeley have developed RoVi-Aug, a computational framework that augments robotic data, enabling easier skill transfer between different robots.

In recent years, roboticists have created a variety of systems aimed at performing real-world tasks, from household chores to package delivery and locating specific objects in controlled environments. A major goal in this field is to develop algorithms that enable the transfer of specific skills across robots with varying physical characteristics, facilitating quick training on new tasks and expanding their functional capabilities.

The UC Berkeley team designed its framework, RoVi-Aug, with this goal in mind. It augments existing robot data by synthesizing visual task demonstrations that show different robots from multiple camera perspectives, so that skills learned on one robot can be transferred to another more easily.

The researchers observed that many prominent robot training datasets, including the widely used Open-X Embodiment (OXE) dataset, are unbalanced. Although OXE is known for its diverse demonstrations of different robots performing various tasks, it contains a disproportionate amount of data for certain robots, such as the Franka and xArm manipulators, compared to others.
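To make the notion of imbalance concrete, the short Python sketch below computes each robot's share of the episodes in a dataset. The function name `embodiment_shares` and the episode labels are hypothetical and invented for illustration; the counts are not real OXE statistics.

```python
from collections import Counter


def embodiment_shares(episode_robots):
    """Return each robot's fraction of all episodes, largest share first."""
    counts = Counter(episode_robots)
    total = sum(counts.values())
    return {robot: n / total for robot, n in counts.most_common()}


# Made-up labels, one per episode (NOT real OXE numbers).
episodes = ["franka"] * 6 + ["xarm"] * 3 + ["ur5"] * 1
shares = embodiment_shares(episodes)

for robot, share in shares.items():
    print(f"{robot}: {share:.0%}")
```

In a dataset skewed like this toy example, a policy sees far fewer examples of the rarer robot, which is the kind of gap that augmentation aims to fill.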

RoVi-Aug relies on diffusion models, a class of generative models. Here, they take the images from a robot's recorded trajectories and produce synthetic versions that depict different robots performing the same tasks from various viewpoints.
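The overall flow of such an augmentation can be sketched in a few lines of Python. This is only a structural illustration under stated assumptions, not the authors' implementation: the names `Step`, `augment_trajectory`, `toy_swap_robot` and `toy_change_view` are hypothetical, the two toy functions are trivial pixel operations standing in for the diffusion models, and the sketch assumes the recorded actions are carried over unchanged.

```python
from dataclasses import dataclass, replace
from typing import Callable, List, Tuple

Frame = List[List[int]]  # toy grayscale image: rows of pixel values 0..255


@dataclass(frozen=True)
class Step:
    image: Frame
    action: Tuple[float, ...]
    robot: str
    view: str


def augment_trajectory(
    traj: List[Step],
    swap_robot: Callable[[Frame, str, str], Frame],
    change_view: Callable[[Frame, str], Frame],
    target_robot: str,
    target_view: str,
) -> List[Step]:
    """Build a synthetic copy of traj showing another robot and viewpoint."""
    augmented = []
    for step in traj:
        image = swap_robot(step.image, step.robot, target_robot)
        image = change_view(image, target_view)
        # Assumption: actions are reused as recorded; only the images change.
        augmented.append(
            replace(step, image=image, robot=target_robot, view=target_view)
        )
    return augmented


# Stand-ins for the generative models (NOT diffusion models).
def toy_swap_robot(image: Frame, source: str, target: str) -> Frame:
    return [[255 - p for p in row] for row in image]  # invert pixels


def toy_change_view(image: Frame, view: str) -> Frame:
    return [row[::-1] for row in image]  # mirror left to right


original = [Step(image=[[0, 255], [10, 20]], action=(0.1, 0.0),
                 robot="franka", view="front")]
synthetic = augment_trajectory(original, toy_swap_robot, toy_change_view,
                               target_robot="ur5", target_view="side")
```

Passing the two image transformations in as arguments keeps the loop independent of whichever generative model is used, so the toy functions could be replaced by real models without changing the surrounding code.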

The researchers used RoVi-Aug to create a dataset of varied synthetic robot demonstrations and then trained robot policies on it. These policies can transfer skills to new robots zero-shot, that is, without any additional training on demonstrations collected with the new robot. The policies can also be fine-tuned to further improve task performance. Unlike Mirage, the approach from the team's previous research, the new framework can accommodate significant changes in camera angle.

Collecting large numbers of real-world robot demonstrations is costly and time-consuming, so RoVi-Aug offers a cheaper and faster way to compile robot training datasets. Although the images in these datasets are AI-generated rather than recorded, they can still be effective in producing reliable robot policies.

Reference: Lawrence Yunliang Chen et al., RoVi-Aug: Robot and Viewpoint Augmentation for Cross-Embodiment Robot Learning, arXiv (2024). DOI: 10.48550/arXiv.2409.03403